Let's return to start of chapter 2 and explore the tools needed to

easily and efficiently work with various linguistic resources.

What are the most used and useful corpora? This is a difficult

question to answer because different problems will likely require

specific annotations and often a specific corpus. There are even

special conferences dedicated to corpus linguistics.

Here's a list of the most well-known general-purpose corpora:

- Brown Corpus - one of the first big corpora and the only one in the

list really easily accessible - we've already worked with it

in the first chapter

- Penn Treebank - Treebank is a corpus of sentences annotated with their

constituency parse trees so that they can be used to train and evaluate parsers

- Reuters Corpus (not to be confused with the ApteMod version provided with NLTK)

- British National Corpus (BNC) - a really huge corpus, but, unfortunately,

not freely available

Another very useful resource which isn't structured specifically as

academic corpora mentioned above, but at the same time has other

dimensions of useful connections and annotations is Wikipedia.

And there's being a lot of interesting linguistic research performed with it.

Besides there are two additional valuable language resources that

can't be classified as text corpora at all, but rather as language

databases: WordNet and Wiktionary. We have already discussed

CL-NLP interface to Wordnet. And we'll touch working with Wiktionary

in this part.

Processing corpora

All of the text corpora we'll encounter come as a set of files which

use some format, either custom or standard (like XML). So to be able

to access this data we'll need to implement reading and processing of

individual files and assembling these files into groups. Besides, as

some corpora are really large (for instance a zipped instance of the

Reuters corpus amounts to 1.5+ Gb), it is also useful to be able to

process the data one text at a time not detaining them in memory.

All this defines a rather simple protocol for corpora processing.

A corpus is just a list of texts, that can be arbitrary grouped.

(defstruct corpus

desc texts groups)

A text has a name and several representations: basic texts may

have a raw, a cleaned-up and a tokenized one. We'll also see texts with

other representations, like the parse trees for treebanks - we'll use

structure inheritance to describe them.

(defstruct text

name raw clean tokens)

The protocol itself comprises of 3 operations:

(defgeneric read-corpus (type path))

(defgeneric read-corpus-file (type source))

(defgeneric map-corpus (type path fn))

read-corpus creates a corpus object, and it uses read-corpus-file

to read individual files and return their contents in multiple forms,

potentially needed for further processing. map-corpus calls function

fn with every text produced from calling read-corpus-file on a

corpus in arbitrary order. This function works more like maphash

than mapcar, because it doesn't collect the results of applying

fn.

The methods of these functions are usually boring. For text-based

formats we implement read-corpus-file by reading each file's text

either in whole or line-by-line with the dolines macro, performing

tokenization and some post-processing. For XML-based variants we may

use SAX processing with Closure XML library.

Processing the NPS Chat corpus

Let's look at the NPS Chat corpus that is provided with NLTK. It has a

rather simple XML format. The entries are called Posts and have the

following structure:

<Post class="Emotion" user="10-19-30sUser2">10-19-30sUser11 lol

<terminals>

<t pos="NNP" word="10-19-30sUser11"/>

<t pos="UH" word="lol"/>

</terminals>

</Post>

We process each file by calling cxml:parse with an instance

of the nps-chat-sax class.

(defmethod read-corpus-file ((type (eql :nps-chat)) source)

(cxml:parse source (make 'nps-chat-sax)))

For this object we'll specialize the parser methods. It will also serve

as a storage for state of processing as SAX parsing operates with

callbacks that don't have access to the current context besides access

to the parser object.

(defclass nps-chat-sax (sax:sax-parser-mixin)

((texts :initform nil)

(tokens :initform nil)

(classes :initform nil)

(users :initform nil)

(cur-tag :initform nil)

(cur-tokens :initform nil)))

read-corpus-file will return a list of each post's contents, tokens,

as well as the list of posts' classes and users, which are indicated

in the meta attributes. Technically these values will be produced by

the sax:end-document method that is called at the end of SAX

processing:

(defmethod sax:end-document ((sax nps-chat-sax))

(with-slots (texts tokens users classes) sax

(values nil ;; raw text isn't useful for this corpus

(reverse texts)

(reverse tokens)

(reverse classes)

(reverse users))))

In sax:start-element method we store the current tag and record

attributes of each post or tokens, depending on the element.

(defmethod sax:start-element ((sax nps-chat-sax)

namespace-uri local-name qname attributes)

(with-slots (classes users cur-tokens cur-tag) sax

(case (setf cur-tag (mkeyw local-name))

(:post (push (attr "class" attributes) classes)

(push (attr "user" attributes) users))

(:t (push (make-token :word (attr "word" attributes)

:tag (mkeyw (attr "pos" attributes)))

cur-tokens)))))

In sax:characters we record current post's text:

(defmethod sax:characters ((sax nps-chat-sax) data)

(with-slots (cur-tag texts) sax

(when (eql :terminals cur-tag)

(push data texts))))

And in sax:end-element we dump current tokens:

(defmethod sax:end-element ((sax nps-chat-sax) namespace-uri

local-name qname)

(when (eql :terminals (mkeyw local-name))

(with-slots (tokens cur-tokens) sax

(push (reverse cur-tokens) tokens)

(setf cur-tokens nil))))

If you have used SAX parsing in some other language, like Python,

you should immediately recognize this approach and see

how it can translated to that language almost line-by-line.

Processing the Reuters corpus

A slightly more complex processing is required for the Reuters

corpus. The reason for that is that unlike with the Chat corpus that

we assumed to be inside a filesystem directory, it's not always

reasonable to extract this corpus as it's big and also is stored in

two-level zip archive with individual archives packed inside another

big archive. Extracting all of them is, obviously, tedious.

For such corpus it makes sense to resort to using map-corpus.

Here's a definition of its method for this corpus:

(defmethod map-corpus ((type (eql :reuters)) path fn)

(zip:with-zipfile (zip path)

(zip:do-zipfile-entries (name entry zip)

(unless (char= #\/ (last-char name))

(with-zipped-zip (in entry :raw t)

(mv-bind (_ text tokens __ ___ headline byline dateline)

(read-corpus-file :reuters in)

(declare (ignore _ __ ___))

(funcall fn (make-reuters-text

:name name

:clean text

:tokens tokens

:headline headline

:byline byline

:dateline dateline))))))))

It relies on read-corpus-file which processes individual

XML documents just like we saw early for the NPS Chat corpus.

But the documents are fed into it not by fad:walk-directory,

but with the help of the utilities, provided by David Lichteblau's

excellent zip library.

(zip:with-zipfile (zip path)

(zip:do-zipfile-entries (name entry zip)

(unless (char= #\/ (last-char name))

(with-zipped-zip (in entry :raw t)

In this snippet we open the zip file at path in with-zipfile and

iterate over its entries with do-zipfile-entries. These are usual

Lisp patterns to handle such kinds of resources. Yet inside the zip

file we find another layer of zip archives. To remove unnecessary

hassle and boilerplate I've added an additional macro with-zipped-zip.

It has to some extent to replicate the functionality of with-zipfile,

but it should take the data from the contents of one of the entries

of the already open zip file. Another twist is that it optionally

gives the possibility to access the raw unzipped binary stream,

not yet converted to a text representation - this is useful for CXML

which can work with such streams efficiently.

(defmacro with-zipped-zip ((name stream zipfile-entry

&key (external-format :utf-8) raw)

&body body)

(with-gensyms (zipstream v end entry entries zip x n)

;; here we transform the archives contents into an input stream

;; and then read it

`(flex:with-input-from-sequence

(,zipstream (zip:zipfile-entry-contents ,zipfile-entry))

(let ((,v (make-array (zip:zipfile-entry-size ,zipfile-entry)

:element-type '(unsigned-byte 8))))

(read-sequence ,v ,zipstream)

;; here we look for the central directory header

;; and move to it in the stream

(if-it (search #(80 75 5 6) ,v :from-end t)

(file-position ,zipstream it)

(error "end of central directory header not found"))

;; here we create a corresponding zipfile object for the entry

(let* ((,end (zip::make-end-header ,zipstream))

(,n (zip::end/total-files ,end))

(,entries (make-hash-table :test #'equal))

(,zip (zip::make-zipfile

:stream ,zipstream

:entries ,entries

:external-format ,external-format)))

(file-position ,zipstream

(zip::end/central-directory-offset ,end))

;; and here we read individual entries from the archive

(dotimes (,x ,n)

(let ((,entry (zip::read-entry-object ,zipstream

,external-format)))

(set# (zip:zipfile-entry-name ,entry) ,entries ,entry)))

;; finally, we're able to access entries in the same manner

;; as we'll do for the ordinary archive

(do-entries (,name ,stream ,zip

:external-format ,external-format :raw ,raw)

,@body))))))

As you see, this macro uses a lot of zip's internal symbols, as it

implements the similar function to the one which the library does,

but not anticipated by its author...

Let's see how it works:

NLP> (map-corpus :reuters "Reuters.zip"

#`(print (text-name %)))

"Reuters/Eng Lang_Disk 1/19960824.zip/12536newsML.xml"

"Reuters/Eng Lang_Disk 1/19960824.zip/12537newsML.xml"

...

Processing the Penn Treebank

The Penn Treebank is a very important corpus which is also not easy to

obtain: you have to order a CD from Linguistic Data Consortium (LDC) and pay

more than 1.5 thousand dollars for it. Yet, there are 2 workarounds:

- NLTK data provides 5% of the treebank, so you can use it for

experimenting with the data format

- There's a more recent corpus OntoNotes which includes the Penn

Treebank, and you also should obtain it from LDC, but it costs only

50$ (I've learned about its existence on StackOverflow, ordered it

and it indeed has a treebank inside, as well as a lot of other

useful linguistic annotations; the only thing I'm not sure about is

whether it is an exact copy of the Penn Treebank)

Let's see how to load the NLTK's treebank. The obvious thing about

treebanks is that they are basically Lisp code, so it should be very

easy to parse the data with Lisp. The only catch is that the treebank

doesn't obey Lisp's formatting rules for strings and doesn't

distinguish special characters like quote and pipe. So the task is to

properly handle all that.

In CL-NLP we have several utilities for dealing with trees: the

macros dotree and doleaves which execute arbitrary code for each

subtree or leaf in a tree for side-effects, and their counterpart

functions maptree and mapleaves that allow to create isomorphic

tree structures by applying a function to all tree's nodes or leaf nodes.

So, reading a treebanked tree will be performed in 2 steps:

first, we prepare the tree for loading by properly escaping everything:

(defun prepare-tree-for-reading (string)

(strjoin " " (mapcar #`(cond-it

((char= #\( (char % 0))

(cond-it

((member (sub % 1)

'("." "," ";" ":" "#" "''" "``")

:test 'string=)

(fmt "(|~A|" (sub % 1))))

((position #\| %)

(fmt "(|~A\\|~A|"

(sub % 1 it) (sub % (1+ it))))

(t %)))

((position #\) %)

(if (zerop it)

%

(fmt "\"~A\"~A" (sub % 0 it) (sub % it))))

(t (fmt "\"~A\"" %)))

(split-sequence-if #`(member % +white-chars+)

string :remove-empty-subseqs t)))

and then we read the tree with the Lisp reader and separately

collect its tokens:

(defmethod read-corpus-file ((type (eql :treebank)) file)

(let ((raw (string-trim +white-chars+ (read-file file))))

(with-input-from-string (in (prepare-tree-for-reading raw))

(loop

:for tree := (car (read in nil)) :while tree

:collect raw :into raws

:collect tree :into trees

:collect (let ((pos 0)

toks)

(dotree (subtree tree)

(when (and (listp subtree)

(single (cdr subtree))

(atom (cadr subtree)))

(let ((word (second subtree)))

(push (make-token

:beg pos

:end (1- (incf pos (1+ (length word))))

:word word

:tag (first subtree))

toks))))

(reverse toks))

:into tokens

:finally

(return (values (strjoin #\Newline raws)

(mapcar #`(strjoin #\Space

(mapcar #'token-word %))

tokens)

tokens

trees))))))

Other corpora

Most of the other corpora will use formats similar to the Brown,

Reuters Corpus, or Penn Treebank, so their readers may easily be

defined by analogy. Besides, the implementations of read-corpus methods

assume a certain way to present the corpus to the library: in a

filesystem directory, in a zipfile etc. Certainly, the corpora may

come in different form, but it will take just a couple of lines of

code changed to re-purpose the methods to handle a different

representation

Working with some other collections of documents presented in the NLTK

book, like Project Gutenberd collection of famous literary texts in

public domain or Inaugural speeches collection is just trivial - you

can see how it's done in our implementation of Chapter 1.

For instance, if we have Project Gutenberg collection in some directory dir,

we can process it by using the following pattern:

(fad:walk-directory

dir

#`(let ((text (string-trim +white-chars (read-file %))))

... do some processing on the raw text of the corpus ...

))

Working with Wikipedia and Wiktionary

Unlike most of academic corpora that are not so easy to obtain

Wikipedia and Wiktionary without a hassle give you access to full

dumps of their data in various formats: SQL, XML and text. For

instance, we can obtain the latest dump of English Wiktionary from

http://dumps.wikimedia.org/enwiktionary/.

I found it the most convenient to process them using the same XML SAX

parsing approach that was utilized for the Reuters and NPS Chat

corpora. The difference for them is that inside the XML documents a

special Mediawiki markup is used to add metadata. It is, in fact, a

heroic feat to parse this markup, because it doesn't have a clear,

precise spec - it's real spec is the PHP code of the Wikipedia's

parser,- and different people tend to (ab)use different variations of

annotations to express the same or similar things, as well as just to

use the markup in an untidy manner. I call such documents

semi-structured compared to unstructured raw text and structured XML

markup. Still, there's a lot of value in this annotation, because of

their crowdsourced nature that allows to capture much more information

than in most centralized efforts. Basically, there are 2 ways to work

with Mediawiki markup: using regexes or implementing the complete parser.

Below is a snippet of code, that uses the regex-based approach to

extract some information from the English Wiktionary - English word

definitions. In Wiktionary markup the definitions are inside each

language's section (denoted with ==Lang== marker, where Lang can

be English or some other language). The definitions themselves are

placed on their own lines and start with one of these markers: #,

#:, #*, #*:, #*::, * [[.

(defvar *defs* (list))

(defclass word-collector (sax:sax-parser-mixin)

((title :accessor title :initform nil)

(tag :accessor tag :initform nil)

(text :accessor text :initform ())

(itemcount :accessor itemcount :initform 0)

(tagcount :accessor tagcount :initform 0)))

(defmethod sax:end-element ((sax word-collector)

namespace-uri local-name qname)

(with-slots (title tag text) sax

(when (string= "text" local-name)

(let ((lang-marker "==English==")

(whole-text (strjoin "" (reverse text))))

(when-it (search lang-marker whole-text)

(let ((start (+ it (length lang-marker))))

(dolist (line (split-sequence

#\Newline (sub whole-text start

(re:scan "[^=]==[^=]" whole-text

:start start))))

(when (some #`(starts-with % line)

'("# " "#: " "#* " "#*: " "#*:: " "* [["))

(push (clean-words line) *defs*)

(incf (itemcount sax))))))))))))

(defun clean-words (line)

"Return a list of downcased words (only alpha-chars or hyphens

in the middle) found in LINE."

(mapcar #`(string-downcase (string-trim "-" %))

(remove-if #`(or (blankp %)

(loop :for char :across % :do

(unless (or (alpha-char-p char)

(char= #\- char))

(return t))))

(re:split +punctuation+ (raw-text line)))))

(defun raw-text (line)

"Return LINE without special-purpose Wiktionary chars."

(re:regex-replace-all "({[^}]+})|(\\[\\[)|(\\]\\])|('''?)"

(sub line (1+ (position #\Space line)))

""))

As the task is clearly defined and limited - extract just the text of definitions - it is possible to use regexes here:

- one regex to spot the interesting lines

- another one to filter out all the unnecessary markup.

Using corpora

Now, as we can already load and play with our corpora let's take a

look at some NLTK examples.

First, let's examine the Brown corpus. Here are its categories:

NLP> (ht-keys (corpus-groups *brown*))

(:PRESS-REPORTAGE :PRESS-EDITORIAL :PRESS-REVIEWS :RELIGION

:SKILL-AND-HOBBIES :POPULAR-LORE :BELLES-LETTRES

:MISCELLANEOUS-GOVERNMENT-HOUSE-ORGANS :LEARNED :FICTION-GENERAL

:FICTION-MYSTERY :FICTION-SCIENCE :FICTION-ADVENTURE :FICTION-ROMANCE

:HUMOR)

As you see, there is no "news" category which is mentioned in the NLTK example. Probably, what they refer to is :press-reportage.

NLP> (take 9 (mapcar #'token-word

(flatten (mapcar #'text-tokens

(get# :press-reportage

(corpus-groups *brown*))))))

("The" "Fulton" "County" "Grand" "Jury" "said" "Friday" "an" "investigation")

NLP> (take 9 (mapcar #'token-word

(flatten

(mapcar #'text-tokens

(remove-if-not #`(string= "cg22" (text-name %))

(corpus-texts *brown*))))))

("Does" "our" "society" "have" "a" "runaway" "," "uncontrollable" "growth")

NLP> (setf *print-length* 3)

NLP> (mapcan #`(ncorpus::sentences-from-tokens (text-tokens %))

(get# :press-reportage (corpus-groups *brown*)))

((#<The/at 0..3> #<Fulton/np-tl 4..10> #<County/nn-tl 11..17> ...)

(#<The/at 166..169> #<jury/nn 170..174> #<further/rbr 175..182> ...)

(#<The/at 409..412> #<September-October/np 413..430> #<term/nn 431..435> ...)

...)

The function sentences-from-tokens here operates under the

assumption, that every token with . tag is ending a sentence, and it

splits the sentences on one or more such tokens.

The presented code may seam much more elaborate, than the NLTK version:

>>> brown.words(categories='news')

['The', 'Fulton', 'County', 'Grand', 'Jury', 'said', ...]

>>> brown.words(fileids=['cg22'])

['Does', 'our', 'society', 'have', 'a', 'runaway', ',', ...]

>>> brown.sents(categories=['news', 'editorial', 'reviews'])

[['The', 'Fulton', 'County'...], ['The', 'jury', 'further'...], ...]

Yet, it should be understood, that it is easy to add a ton of various

utility accessors, like it is done in NLTK, but they will inevitable

make the code more complex and harder to maintain. And the question

is, how frequently are they going to be used and what we will miss anyway?

Coding such utilities is very pleasant and easy using the

functional style, as shown above, so they are left outside of the

scope of cl-nlp, at least until proven essential...

(NB. Don't forget to set *print-length* back to nil).

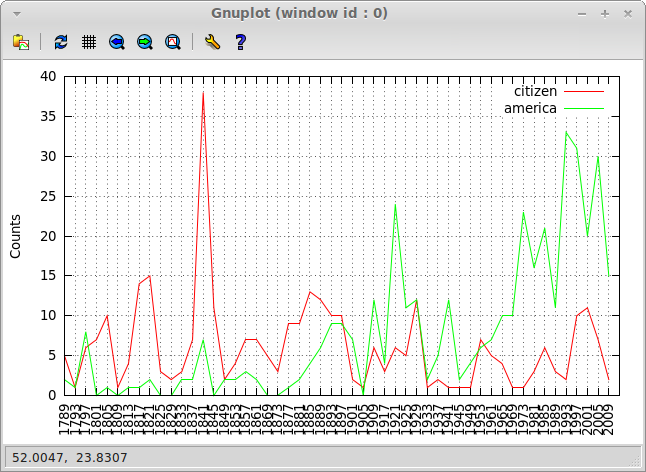

Next, we'll be once again looking at frequency distributions. First,

we need to build an ngram index from Brown corpus words in "news"

category. In chapter 1 we've already done a very similar thing:

NLP> (index-ngrams

1 (mapcar #'token-word

(flatten (mapcar #'text-tokens

(get# :press-reportage

(corpus-groups *brown*))))))

#<TABLE-NGRAMS order:1 count:14395 outcomes:108130 {101C2B57E3}>

NLP> (defvar *news-1grams* *) ;; * is the previous returned value

NLP> (dolist (m '("can" "could" "may" "might" "must" "will"))

(format t "~A: ~A " m (freq *news-1grams* m)))

can: 93 could: 86 may: 66 might: 38 must: 50 will: 389

Funny, some numbers don't add up to what there's in NLTK:

can: 94 could: 87 may: 93 might: 38 must: 53 will: 389

Well, we can double-check them from a different direction:

NLP> (length (remove-if-not

#`(string= "can" %)

(mapcar #'token-word

(flatten (mapcar #'text-tokens

(get# :press-reportage

(corpus-groups *brown*)))))))

93

Looks like our calculation is precise.

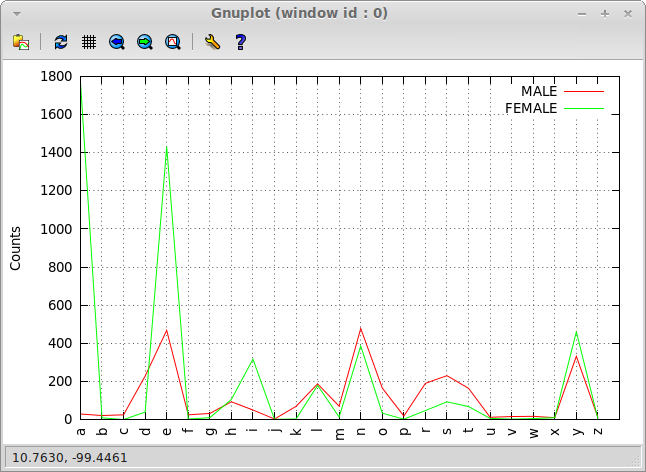

Next is conditional frequency distribution, but we'll leave this topic

for the next part that is fully dedicated to that.

So far so good. We have examined common approaches to handling various

corpora. There are, basically, 2 main things you need to do with them:

load & parse and filter for some interesting information. The loading

for most corpora will be performed either with some regexes on raw

strings, with XML SAX parsing, or using the Lisp reader. And for

filtering Lisp offers a great versatile toolset of higher-order

functions: mapcar, remove-if-not, position, and reduce -

to name a few.